Conflict zones often provide an opportunity to test and deploy new technologies. Such is the case with facial recognition, which Ukraine is set to use for identification purposes. For better or for worse?

In his novel 1984, George Orwell imagined the character “Big Brother,” whose watchful eye constantly surveils the population. In 2022, Big Brother has taken the form of facial recognition, which is gradually spreading within our societies, despite its controversial reputation.

In the context of the war between Russia and Ukraine, this technology—made available to Ukrainian authorities by the start-up Clearview AI—is intended to identify refugees at checkpoints, to recognize individuals killed in combat, and to detect Russian agents attempting to infiltrate. Clearview AI’s search engine relies on a database of more than ten billion images, sourced in particular from social media.

Between biometrics and Artificial Intelligence.

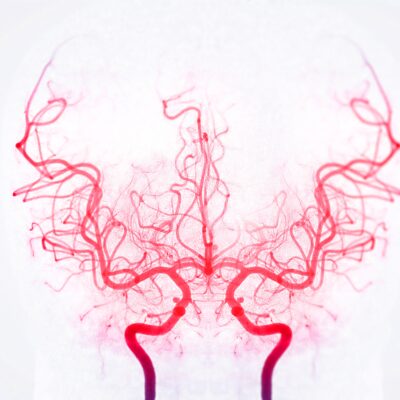

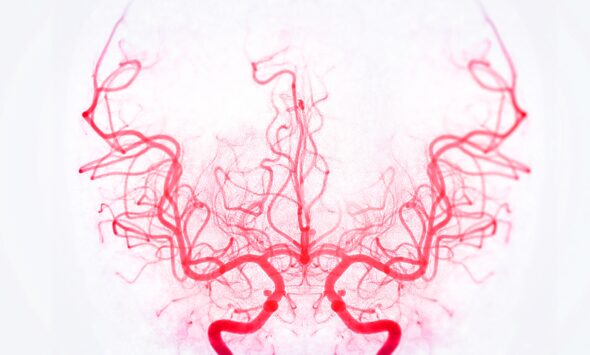

Facial recognition analyzes the distinct characteristics of a face by combining several technologies: biometrics, Artificial Intelligence, and 2D or 3D mapping. In practice, a face is first isolated from a photograph or video and its specific features examined (such as the distance between the eyes, the size and position of the ears, or the shape of the lips). Facial recognition software can process up to 80 of these characteristics, also known as nodal points. These data are then used to generate a numerical code or “facial imprint” unique to each individual, much like fingerprints. This imprint can subsequently be compared against a database containing millions of other similarly mapped faces. Thanks to artificial neural networks modeled on the human brain, deep learning has enabled algorithms to acquire the ability to recognize human faces and, in principle, to match a proposed imprint with the corresponding photograph without error.
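To make the idea of a "facial imprint" more concrete, here is a minimal sketch in Python, offered as an illustration rather than a description of how Clearview AI or any real product works. It assumes a handful of hypothetical landmark coordinates (the names `left_eye`, `nose`, and so on, the sample faces, and the `threshold` value are all invented for this example), turns them into a short vector of distances normalized by the gap between the eyes, and compares that vector with stored ones. Production systems rely on learned neural-network embeddings rather than hand-picked distances.

```python
import math

# Hypothetical landmark names; real systems use many more points
# and learned features rather than a few hand-picked distances.
PAIRS = [
    ("left_eye", "nose"),
    ("right_eye", "nose"),
    ("nose", "mouth"),
    ("left_eye", "mouth"),
    ("right_eye", "mouth"),
]


def facial_imprint(landmarks):
    """Turn (x, y) landmarks into a vector of distances.

    Each distance is divided by the eye-to-eye distance so that the
    imprint does not depend on how large the face appears in the image.
    """
    eye_dist = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    return [math.dist(landmarks[a], landmarks[b]) / eye_dist for a, b in PAIRS]


def best_match(imprint, database, threshold=0.1):
    """Return (name, distance) for the closest stored imprint.

    The name is None when even the closest imprint is farther away
    than the (arbitrary, illustrative) threshold.
    """
    best_name, best_dist = None, math.inf
    for name, stored in database.items():
        d = math.dist(imprint, stored)
        if d < best_dist:
            best_name, best_dist = name, d
    if best_dist > threshold:
        return None, best_dist
    return best_name, best_dist


# Invented sample faces, coordinates in pixels.
database = {
    "person_a": facial_imprint(
        {"left_eye": (30, 40), "right_eye": (70, 40), "nose": (50, 60), "mouth": (50, 80)}
    ),
    "person_b": facial_imprint(
        {"left_eye": (30, 40), "right_eye": (80, 40), "nose": (55, 70), "mouth": (55, 85)}
    ),
}

# Same proportions as person_a, photographed at twice the size.
probe = facial_imprint(
    {"left_eye": (60, 80), "right_eye": (140, 80), "nose": (100, 120), "mouth": (100, 160)}
)
# A face whose proportions match nobody in the database.
stranger = facial_imprint(
    {"left_eye": (20, 40), "right_eye": (80, 40), "nose": (50, 50), "mouth": (50, 100)}
)

match_name, match_distance = best_match(probe, database)
unknown_name, unknown_distance = best_match(stranger, database)
```

In this toy setup, `match_name` is `"person_a"` because the probe has the same proportions at a different scale, while `unknown_name` is `None` because no stored imprint is close enough. The threshold is where the trade-off lies: set it too loosely and strangers are matched to the wrong person, set it too strictly and genuine matches are missed.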

An exceptional tool in the field of security.

Even if it is not always apparent, the use of facial recognition technology is steadily expanding. Authorities are increasingly employing it, particularly for surveillance in airports and at border crossings.

In the United States, where no comprehensive federal law regulates the collection and storage of personal data, the FBI already maintains a database of 650 million images, sourced from airports and social media. In many U.S. cities, law enforcement officers are also equipped with body cameras capable of real-time facial recognition. A simple photograph of a driver or a suspect can be cross-checked against available databases to determine whether that individual is on record.

In France, police forces have access to a database incorporating facial recognition known as TAJ (Traitement des Antécédents Judiciaires). It consolidates information drawn from investigative and intervention reports and currently contains more than 8 million photographs. Access to this database is legally regulated and restricted to criminal investigations, inquiries into misdemeanors, and the search for missing persons. In addition, the PARAFE facial recognition system has been deployed in a number of strategically sensitive train stations and airports.

This technology is undeniably a valuable tool for securing major events, tracking fugitives, or identifying dangerous individuals. However, France remains cautious about its use. While the CNIL (Commission Nationale de l’Informatique et des Libertés) authorized an experimental trial in 2019 during the Nice Carnival, the organizers of the 2024 Olympic Games appear to have definitively ruled out its use as a security measure.

Privacy under surveillance?

Such caution is far from unwarranted. Beyond the obvious difficulties in regulating its use and preventing abuses in conflict zones, facial recognition immediately raises a fundamental question: how can individuals protect their privacy and personal data if their faces risk being incorporated into such software without their knowledge?

Indeed, many companies and online platforms have already integrated facial recognition into their technologies. Apple, for instance, uses it to unlock smartphones, while Twitter, Facebook, and Google have also implemented similar systems. The images circulating on these platforms have already enabled Clearview AI, the very company now equipping Ukraine, to harvest several billion photographs and videos, along with the personal data attached to them. The consequences of such practices remain difficult to fully assess.

Sources:

https://www.cnil.fr/fr/reglement-europeen-protection-donnees

All rights reserved - © 2026 Forenseek