By Yann CHOVORY, Engineer in AI Applied to Criminalistics (Institut Génétique Nantes Atlantique – IGNA).

At a crime scene, every minute counts. Investigators must identify a fleeing suspect, prevent further offences and manage the time constraints of an investigation, all in a genuine race against the clock. Fingerprints, gunshot residues, biological traces, video surveillance, digital data… all these clues must be collected and analyzed quickly, or the case risks collapsing for lack of usable evidence in time. Yet forensic laboratories, overwhelmed by an ever-growing mass of data, are struggling to keep pace.

Analyzing evidence with speed and accuracy

In this context, artificial intelligence (AI) is emerging as an indispensable accelerator. It can process in a few hours what would take weeks to analyze manually. It makes better use of clues by speeding up their sorting and detecting links the human eye would miss. More than a time-saver, it also improves the relevance of investigations: swiftly cross-referencing databases, spotting hidden patterns in phone call records, comparing DNA fragments with high precision. AI thus acts as a tireless virtual analyst, reducing the risk of human error and offering new opportunities to forensic experts.

But this technological revolution does not come without friction. Between institutional scepticism and operational resistance, its integration into investigative practices remains a challenge. My professional journey, marked by a persistent quest to integrate AI into scientific policing, illustrates this transformation—and the obstacles it faces. From a marginalised bioinformatician to project lead for AI at IGNA, I have observed from within how this discipline, long grounded in traditional methods, is adapting—sometimes under pressure—to the era of big data.


Concrete examples: AI from the crime scene to the laboratory

AI is already making inroads in several areas of criminalistics, with promising results. For example, AFIS (Automated Fingerprint Identification System) platforms now incorporate machine learning components to improve the matching of latent fingerprints. This reduces the risk of human error and increases the reliability of identifications [1]. Likewise, in ballistics, computer vision algorithms now automatically compare the striations on a projectile with the markings of known firearms, speeding up the work of firearms examiners. Tools are also emerging to interpret bloodstains at a scene: machine learning¹ models can help reconstruct the trajectory of blood droplets and thus the dynamics of an assault or other violent event [2]. These examples illustrate how AI is entering the forensic expert’s toolkit, from crime scene image analysis to the recognition of complex patterns.

But it is perhaps in forensic genetics that AI currently raises the greatest hopes. DNA analysis laboratories process thousands of samples and genetic profiles, often under critical deadlines, and AI offers considerable time savings along with improved accuracy. As part of my research, I contributed to developing an in-house AI capable of interpreting 86 genetic profiles in just three minutes [3]. That is a major advance when analyzing a single complex profile can take hours. Since 2024, it has autonomously handled simple profiles, while complex profiles are automatically routed to a human expert, ensuring effective collaboration between automation and expertise. The results observed are very encouraging. The turnaround time for DNA results is drastically reduced, and the error rate also falls thanks to the standardization introduced by the algorithm.
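The division of labour described above can be pictured as a triage rule: simple profiles are interpreted automatically and complex ones are sent to an expert. The sketch below is purely illustrative. The `Profile` fields and the thresholds (`MAX_AUTO_CONTRIBUTORS`, `MIN_PEAK_HEIGHT_RFU`, `MIN_MODEL_CONFIDENCE`) are hypothetical and are not the criteria used by the system cited in [3].

```python
from dataclasses import dataclass

# Hypothetical thresholds, for illustration only.
MAX_AUTO_CONTRIBUTORS = 1    # only single-source profiles go to the automatic path
MIN_PEAK_HEIGHT_RFU = 100    # weaker signals suggest degraded or low-template DNA
MIN_MODEL_CONFIDENCE = 0.95  # below this, the model's own output is not trusted


@dataclass
class Profile:
    case_id: str
    estimated_contributors: int   # hypothetical field: estimated number of contributors
    min_peak_height_rfu: float    # hypothetical field: lowest allele peak height (RFU)
    model_confidence: float       # hypothetical field: model confidence in [0, 1]


def route(profile: Profile) -> str:
    """Return 'automatic' only if every simplicity criterion holds, otherwise 'expert'."""
    is_simple = (
        profile.estimated_contributors <= MAX_AUTO_CONTRIBUTORS
        and profile.min_peak_height_rfu >= MIN_PEAK_HEIGHT_RFU
        and profile.model_confidence >= MIN_MODEL_CONFIDENCE
    )
    return "automatic" if is_simple else "expert"


queue = [
    Profile("A-001", 1, 450.0, 0.99),  # clean single-source profile
    Profile("A-002", 3, 120.0, 0.97),  # three-person mixture
    Profile("A-003", 1, 60.0, 0.98),   # weak, possibly degraded signal
]
for p in queue:
    print(p.case_id, route(p))
```

A profile that fails any single criterion goes to a human. Under this design, the default whenever there is doubt is expert review rather than automation.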


Another promising advance lies in improving DNA-based facial composites. This technique currently makes it possible to estimate certain physical features of an individual (such as eye color, hair color, or skin pigmentation) from their genetic code. However, it remains limited by the complexity of genetic interactions and by uncertainty in its predictions. AI could revolutionise this approach by using deep learning models trained on vast genetic and phenotypic databases, refining these predictions and generating more accurate composites. Classical methods rely on statistical models built on a limited set of known markers. An AI model, by contrast, could analyse millions of genetic variants in a few seconds and identify subtle correlations that traditional approaches miss. This prospect opens the way to a significant improvement in the relevance of DNA-based composites, facilitating suspect identification when no other usable clues are available. The Forenseek platform has reviewed current advances in this area, but AI has not yet been fully exploited to surpass existing methods [5]. Its integration could therefore constitute a major breakthrough in criminal investigations.
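The classical approach can be made concrete. Widely used eye-color prediction models apply multinomial logistic regression to a small panel of SNPs. The sketch below reproduces only the general shape of such a model. The SNP names `snp_1` to `snp_3` and all coefficients are invented and carry no biological meaning.

```python
import math

# Invented coefficients: one intercept and one weight per SNP for each
# non-reference class. "brown" is the reference class (its score is fixed at 0).
COEFFICIENTS = {
    "blue":         {"intercept": 1.0, "snp_1": -1.5, "snp_2": 0.2, "snp_3": -0.3},
    "intermediate": {"intercept": -0.5, "snp_1": -0.6, "snp_2": 0.4, "snp_3": 0.1},
}
REFERENCE_CLASS = "brown"


def predict_eye_color(genotype: dict[str, int]) -> dict[str, float]:
    """Multinomial logistic regression; genotype values are minor-allele counts (0, 1 or 2)."""
    scores = {REFERENCE_CLASS: 0.0}
    for color, coefs in COEFFICIENTS.items():
        scores[color] = coefs["intercept"] + sum(
            weight * genotype[snp] for snp, weight in coefs.items() if snp != "intercept"
        )
    # Softmax turns the linear scores into probabilities that sum to 1.
    max_score = max(scores.values())
    exp_scores = {c: math.exp(s - max_score) for c, s in scores.items()}
    total = sum(exp_scores.values())
    return {c: v / total for c, v in exp_scores.items()}


probs = predict_eye_color({"snp_1": 0, "snp_2": 1, "snp_3": 0})
print({c: round(p, 3) for c, p in probs.items()})
```

Such a model only sees the markers it was built on and treats their effects as additive on the log-odds scale. A deep learning model could, in principle, capture interactions among many more variants, at the cost of requiring far larger training sets.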

It is important to emphasize that in all these examples, AI does not replace humans but complements them. Consider the IRCGN (Institut de Recherche Criminelle de la Gendarmerie Nationale, the French National Gendarmerie’s criminal research institute). There, although most routine, good-quality DNA profiles can be handled automatically, regular human quality control remains in place: every week, a technician randomly checks cases processed by the AI to ensure that no drift has occurred [3]. This human-machine collaboration is key to successful deployment. The expertise of forensic specialists remains indispensable to validate and finely interpret the results, especially in complex cases.
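The weekly spot check described in [3] amounts to drawing a random sample of AI-processed cases and comparing the technician’s reading with the AI’s. Here is a minimal sketch, assuming case identifiers and conclusions are available as plain strings. The sample size, seed and discordance metric are illustrative choices, not the IRCGN protocol.

```python
import random
from typing import Optional


def weekly_audit_sample(ai_case_ids: list[str], sample_size: int,
                        seed: Optional[int] = None) -> list[str]:
    """Draw a simple random sample, without replacement, of cases processed by the AI."""
    rng = random.Random(seed)  # a recorded seed makes the draw reproducible for the audit log
    k = min(sample_size, len(ai_case_ids))
    return sorted(rng.sample(ai_case_ids, k))


def discordance_rate(ai_results: dict[str, str], reviewer_results: dict[str, str]) -> float:
    """Share of audited cases where the technician's conclusion differs from the AI's."""
    audited = reviewer_results.keys()
    disagreements = sum(1 for case in audited if ai_results[case] != reviewer_results[case])
    return disagreements / len(audited) if audited else 0.0


week_cases = [f"CASE-{i:04d}" for i in range(1, 201)]
to_review = weekly_audit_sample(week_cases, sample_size=10, seed=2025)
print(to_review)

# Hypothetical conclusions recorded by the AI and by the reviewing technician.
ai_results = {case: "single-source" for case in to_review}
reviewer_results = dict(ai_results)
reviewer_results[to_review[0]] = "mixture"  # one disagreement in this toy example
print(f"discordance: {discordance_rate(ai_results, reviewer_results):.0%}")
```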

Algorithms Trained on Data: How AI “Learns” in Forensics

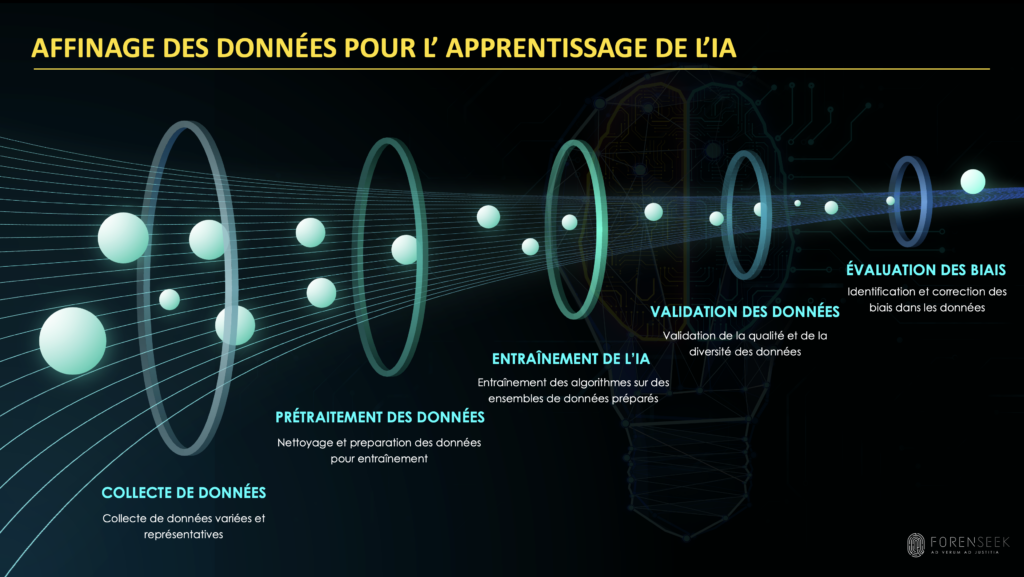

The impressive performance of AI in forensics relies on one crucial resource: data. For a machine learning algorithm to identify a fingerprint or interpret a DNA profile, it first needs to be trained on numerous examples. In practical terms, we provide it with representative datasets. Each example pairs inputs (images, signals, genetic profiles, etc.) with an expected outcome (the correct source of a trace, the exact composition of a DNA profile, etc.). By analyzing thousands, or even millions, of these examples, the machine adjusts its internal parameters to best replicate the decisions made by human experts. This is known as supervised learning, since the AI learns from cases where the correct outcome is already known. For example, to train a model to interpret DNA profiles, we use data from solved cases where the expected result is clearly established.
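To illustrate what "adjusting internal parameters" means, here is a minimal sketch of supervised learning: a logistic regression fitted by gradient descent on labelled examples. The two numeric features and the toy labels are invented for illustration. A real forensic model would use far richer inputs and an established library.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic_regression(examples, labels, learning_rate=0.5, epochs=2000):
    """Fit weights and a bias by batch gradient descent on the log-loss."""
    n, n_features = len(examples), len(examples[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(examples, labels):
            # Prediction error for this labelled example (probability minus true label).
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        # Move the parameters a small step against the average gradient.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


def predict(weights, bias, x) -> int:
    return int(sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5)


# Invented training data: two similarity features per comparison, label 1 = same source
# (as established by experts in solved cases), 0 = different source.
X = [[0.9, 0.8], [0.8, 0.9], [0.7, 0.6], [0.2, 0.1], [0.1, 0.3], [0.3, 0.2]]
y = [1, 1, 1, 0, 0, 0]
w, b = train_logistic_regression(X, y)
print([predict(w, b, x) for x in X])
```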


The larger and more diverse the training dataset, the better the AI will be at detecting reliable and robust patterns. However, not all data are equal. Data must be of high quality (e.g., properly labeled images, DNA profiles free from input errors) and cover a wide enough range of situations. If the system has been exposed to only a narrow range of cases, it may fail when confronted with a slightly different scenario. In genetics, for instance, this means including profiles from various ethnic backgrounds, with varying degrees of degradation and complex mixture configurations, so that the algorithm learns to handle all potential sources of variation.
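One practical way to check that a training set covers "a wide enough range of situations" is to count examples for each combination of relevant conditions. Empty or sparse combinations can then be flagged. In the sketch below, the attribute names (`degradation`, `contributors`), their levels and the minimum count of 2 are hypothetical.

```python
from collections import Counter
from itertools import product


def coverage_gaps(records, attributes, levels, min_count):
    """Return every combination of attribute levels seen fewer than `min_count` times."""
    counts = Counter(tuple(r[a] for a in attributes) for r in records)
    all_cells = product(*(levels[a] for a in attributes))
    return {cell: counts.get(cell, 0) for cell in all_cells if counts.get(cell, 0) < min_count}


training_set = [
    {"degradation": "none", "contributors": 1},
    {"degradation": "none", "contributors": 1},
    {"degradation": "none", "contributors": 2},
    {"degradation": "high", "contributors": 1},
]
levels = {"degradation": ["none", "high"], "contributors": [1, 2]}
gaps = coverage_gaps(training_set, ["degradation", "contributors"], levels, min_count=2)
print(gaps)  # degraded two-person mixtures are entirely missing from this toy set
```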

Transparency in data composition is essential. Studies have shown that some forensic databases are demographically unbalanced: for example, the U.S. CODIS database contains an overrepresentation of profiles from African-American individuals compared with other groups [6]. A model naively trained on such data could inherit systemic biases and produce less reliable or less fair results for underrepresented populations. It is therefore crucial to monitor training data for bias and, if necessary, to correct it (e.g., through balanced sampling or augmentation of underrepresented data) in order to achieve fair learning.
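Balanced sampling can take several forms. One of the simplest is random oversampling, which duplicates records from smaller groups until every group matches the largest. The sketch below assumes a hypothetical `population` field for grouping. Oversampling only reweights existing examples and cannot create information that was never collected. The duplicates should also stay in the training split, so that they do not leak into the test set.

```python
import random
from collections import Counter, defaultdict


def oversample_to_balance(records, group_key, seed=0):
    """Random oversampling: draw extra copies (with replacement) from smaller groups
    until every group is as large as the largest one."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for record in records:
        groups[record[group_key]].append(record)
    target = max(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        balanced.extend(members)  # keep every original record
        balanced.extend(rng.choices(members, k=target - len(members)))
    rng.shuffle(balanced)
    return balanced


# Toy dataset: group "A" has 6 records, group "B" only 2 (hypothetical labels).
records = [{"id": i, "population": "A"} for i in range(6)]
records += [{"id": i, "population": "B"} for i in range(6, 8)]
balanced = oversample_to_balance(records, "population")
print(Counter(r["population"] for r in balanced))
```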

- Data Preprocessing: cleaning and preparing data for training
- AI Training: training algorithms on the prepared datasets
- Data Validation: verifying the quality and diversity of the data
- Bias Evaluation: identifying and correcting biases in the datasets
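The data preprocessing step often starts with rejecting records that contain obvious input errors. Here is a minimal sketch, assuming each single-source reference profile is stored as a mapping from locus name to a list of allele designations. The rule that such a profile shows at most two alleles per autosomal STR locus is standard. The record format, the allele pattern and the short locus list are illustrative assumptions.

```python
import re

ALLELE_PATTERN = re.compile(r"^\d{1,2}(\.\d)?$")  # e.g. "12" or the microvariant "9.3"
REQUIRED_LOCI = ("D3S1358", "vWA", "FGA")         # illustrative subset only


def validate_reference_profile(profile: dict[str, list[str]]) -> list[str]:
    """Return the problems found in a single-source reference profile (empty if none)."""
    problems = []
    for locus in REQUIRED_LOCI:
        alleles = profile.get(locus)
        if not alleles:
            problems.append(f"{locus}: missing")
            continue
        if len(alleles) > 2:
            problems.append(f"{locus}: {len(alleles)} alleles in a single-source profile")
        for allele in alleles:
            if not ALLELE_PATTERN.match(allele):
                problems.append(f"{locus}: malformed allele {allele!r}")
    return problems


def clean(profiles):
    """Keep only the profiles in which no problem was detected."""
    return [p for p in profiles if not validate_reference_profile(p)]


good = {"D3S1358": ["15", "16"], "vWA": ["17"], "FGA": ["21", "22.2"]}
bad = {"D3S1358": ["15", "16", "17"], "vWA": ["1 7"]}  # typing errors, missing FGA
print(validate_reference_profile(bad))
print(len(clean([good, bad])))
```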

Technically, training an AI involves rigorous cross-validation and performance measurement. We generally split data into three sets: one for training, another for validation during development (to tune the parameters), and a final test set to evaluate the model objectively. Quantitative metrics make it possible to quantify how reliable the algorithm is on data it has never seen [6]. These include accuracy, recall (sensitivity), and ROC curves, which plot the true positive rate against the false positive rate. For example, one can check that the AI correctly identifies the source of a large majority of traces while maintaining a low false positive rate. Increasingly, we also integrate fairness and ethical criteria into these evaluations: performance is examined across demographic groups and testing conditions (gender, age, etc.) to ensure that no unacceptable bias remains [6]. Finally, compliance with legal constraints must be built in from the design phase of the system [6]. These constraints include the GDPR in Europe, which regulates the use of personal data. This may involve anonymizing data, limiting certain sensitive information, or providing procedures in case an ethical bias is detected.
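The three-way split and the metrics mentioned above can be sketched as follows. The 70/15/15 proportions are a common convention, not a rule. The group labels and predictions in the usage example are invented. The per-group breakdown is what reveals the kind of gap that a single overall score can hide.

```python
import random


def three_way_split(records, train=0.70, validation=0.15, seed=42):
    """Shuffle once, then cut into train / validation / test subsets."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


def binary_metrics(y_true, y_pred):
    """Accuracy, recall (sensitivity) and false positive rate for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "recall": tp / (tp + fn) if tp + fn else float("nan"),
        "false_positive_rate": fp / (fp + tn) if fp + tn else float("nan"),
    }


def metrics_by_group(y_true, y_pred, groups):
    """Compute the same metrics separately for each group to expose performance gaps."""
    result = {}
    for g in sorted(set(groups)):
        idx = [i for i, x in enumerate(groups) if x == g]
        result[g] = binary_metrics([y_true[i] for i in idx], [y_pred[i] for i in idx])
    return result


train_set, val_set, test_set = three_way_split(range(100))  # stand-ins for labelled cases
print(len(train_set), len(val_set), len(test_set))

y_true = [1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
for group, m in metrics_by_group(y_true, y_pred, groups).items():
    print(group, m)
```

In forensic data, samples from the same case or the same person should also be kept on one side of the split. Otherwise, near-duplicates in the test set inflate the measured performance.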

Ultimately, an AI’s performance depends on the quality of the data that trains it. In the forensic field, this means that algorithms “learn” from accumulated human expertise. Every algorithmic decision reflects the experience of hundreds of experts who provided examples or tuned parameters. This is both a strength, since it capitalizes on a vast knowledge base, and a responsibility: the data that will feed the artificial intelligence must be carefully selected, prepared, and controlled.

Technical and operational challenges for integrating AI into forensic science


While AI promises substantial gains, its concrete integration in the forensic field faces many challenges. It is not enough to train a model in a laboratory: one must also be able to use it within the constrained framework of a judicial investigation, with all the reliability requirements that entails. Among the main technical and organisational challenges are:

- Access to data and infrastructure: Paradoxically, although AI requires large datasets to learn, it can be difficult to gather sufficient data in the specific forensic domain. DNA profiles, for example, are highly sensitive personal data, protected by law and stored in secure, sequestered databases. Obtaining datasets large enough to train an algorithm may require complex cooperation between agencies or the generation of synthetic data to fill gaps. Additionally, computing tools must be capable of processing large volumes of data in reasonable time, which requires investment in hardware (servers, GPUs² for deep learning³) and specialized software. Some national initiatives are beginning to emerge to pool forensic data securely, but this remains an ongoing project.

- Quality of annotations and bias: The effectiveness of AI learning depends on the quality of the annotations in training datasets. In many forensic areas, establishing “ground truth” is not trivial. For example, to train an algorithm to recognize faces in surveillance video, each face must first be correctly identified by a human, which can be difficult if the image is blurry or partial. Similarly, labeling datasets of footprints, fibers, or fingerprints requires meticulous work by experts and sometimes involves subjectivity. If the training data include annotation errors or historical biases, the AI will reproduce them [6]. A common bias is the demographic imbalance noted above, but there may be others. For instance, if a weapon detection model is trained mainly on images of weapons indoors, it may perform poorly at detecting a weapon outdoors, in rain, etc. The quality and diversity of annotated data are therefore a major technical issue. Addressing it requires rigorous data collection and annotation protocols (ideally standardized at the international level). It also requires ongoing monitoring to detect model drift (overfitting to certain cases, performance degradation over time, etc.). Validating these models relies on experimental studies comparing AI performance with that of human experts. However, complex certification (homologation) and procurement procedures often slow adoption, delaying the deployment of new tools in forensic science by several years.

- Understanding and Acceptance by Judicial Actors: Introducing artificial intelligence into the judicial process inevitably raises the question of trust. An investigator or a laboratory technician trained in conventional methods must learn to use and interpret the results provided by AI. This requires training and a gradual cultural shift so that the tool becomes an ally and not an “incomprehensible black box.” More broadly, judges, attorneys, and jurors who will have to discuss this evidence must also grasp its principles. Yet explaining the inner workings of a neural network or the statistical meaning of a similarity score is far from simple. We sometimes observe misunderstanding or suspicion from certain judicial actors toward these algorithmic methods [6]. If a judge does not understand how a conclusion was reached, they may, out of caution, reject it or give it less weight. Similarly, a defence lawyer will legitimately scrutinize the weaknesses of an unfamiliar tool, which may lead to courtroom debates over the validity of the AI. A major challenge is thus to make AI explainable (the “XAI” concept, for eXplainable Artificial Intelligence). At the very least, its results should be presented in a format that a court can understand and that is pedagogically suitable. Without this, integrating AI risks facing resistance or sparking controversy in trials, limiting its practical contribution.

- Regulatory Framework and Data Protection: Finally, forensic sciences operate within a strict legal framework, notably regarding personal data (DNA profiles, biometric data, etc.) and criminal procedure. The use of AI must comply with these regulations. In France, the CNIL (Commission Nationale de l’Informatique et des Libertés) oversees compliance and can impose restrictions if algorithmic processing harms privacy. For example, training an AI on identifiable DNA profiles without a legal basis would be inconceivable. Innovation must therefore remain within legal boundaries, which imposes constraints from the design phase of projects onward. Another issue concerns trade secrecy surrounding certain algorithms in judicial contexts. If a vendor refuses to disclose the internal workings of its software for intellectual property reasons, how can the defence or the judge ensure its reliability? In recent cases, defendants have been convicted on the basis of proprietary software (e.g., for DNA analysis) without the defence being able to examine the source code used [7]. These situations raise issues of transparency and of the rights of the defence. In the United States, a bill reintroduced in Congress, the Justice in Forensic Algorithms Act, aims precisely to ensure that trade secrecy cannot prevent experts from examining the algorithms used in forensics, in order to guarantee fair trials [7]. This underlines the need to adapt regulatory frameworks to these new technologies.


- Another more structural obstacle lies in the difficulty of integrating hybrid profiles within forensic institutions, at least in France. Today, competitive examinations and recruitment often remain compartmentalised between different specialties, limiting the emergence of experts with dual expertise. For instance, in forensic police services, entrance exams for technicians or engineers are divided into distinct specialties such as biology or computer science, without pathways to recognize combined expertise in both fields. This institutional rigidity slows the integration of professionals capable of bridging between domains and fully exploiting the potential of AI in criminalistics. Yet current technological advances show that the analysis of biological traces increasingly relies on advanced digital tools. Faced with this evolution, greater flexibility in recruitment and training of forensic experts will be necessary to meet tomorrow’s challenges.


- A further major barrier to innovation in forensic science is the compartmentalization of efforts among different stakeholders, who often work in parallel on identical problems without pooling their advances. This lack of cooperation slows the development of effective tools and limits their adoption in the field. By sharing our resources, whether databases, methodologies, or algorithms, we could accelerate the deployment of AI solutions into production and guarantee continuous improvement based on collective expertise. I have worked in several French laboratories: the Lyon Scientific Police Laboratory (Service National de Police Scientifique, SNPS), the Institut de Recherche Criminelle de la Gendarmerie Nationale (IRCGN), and now the Nantes Atlantique Genetic Institute (IGNA). This experience has shown me how much this fragmentation hampers progress, even though we pursue a common goal: improving the resolution of investigations. This is why it is essential to promote open-source development when possible and to create platforms for collaboration among public and judicial entities. AI in forensics must not be a matter of competition or prestige among laboratories, but a tool in the service of justice and truth, for the benefit of investigators and victims alike.
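The "ongoing monitoring to detect model drift" mentioned in the list above can be made quantitative. One simple approach assumes that periodic audits yield a count of AI/expert disagreements. It then asks how likely that count would be if the model still had its validated baseline error rate. The 2% baseline and the 1% alert level below are hypothetical values, and a one-sided binomial test is only one of several possible monitoring methods.

```python
from math import comb


def binomial_upper_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def drift_alert(disagreements: int, audited: int, baseline_error: float = 0.02,
                alpha: float = 0.01) -> bool:
    """Flag possible drift when this many disagreements would be unlikely at the baseline rate."""
    return binomial_upper_tail(disagreements, audited, baseline_error) < alpha


print(drift_alert(1, 50))  # 1 disagreement in 50 audited cases
print(drift_alert(6, 50))  # 6 disagreements in 50 audited cases
```

Running such a test every week multiplies the opportunities for false alarms. In practice, the alert level, or a sequential monitoring method, would need to account for that repetition.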

Ethical and Legal Stakes: Innovating Without Abandoning Safeguards

The challenges discussed above all have technical dimensions, but they are closely intertwined with fundamental ethical and legal questions. From an ethical standpoint, the absolute priority is to avoid injustice through the use of AI. We must at all costs prevent a poorly designed algorithm from leading to someone’s wrongful indictment or, conversely, to the release of a guilty party. This involves mastering biases (to avoid discrimination against certain groups) and ensuring transparency (so that every party in a trial can understand and challenge algorithmic evidence). It also involves establishing accountability for decisions. Indeed, who is responsible if an AI makes an error? The expert who misused it, the software developer, or no one because “the machine made a mistake”? This ambiguity is unacceptable in justice. Human expertise must always remain in the loop, so that any final decision, whether to accuse or exonerate, rests on human evaluation informed by AI, not on the opaque verdict of an automated system.
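For simple models, transparency can be quite literal: a linear score decomposes exactly into per-feature contributions, each of which the parties can inspect and challenge. The sketch below assumes a hypothetical linear scoring model with invented feature names and weights. For deep neural networks, attribution methods can only approximate this kind of decomposition, which is why explaining them is considerably harder.

```python
def explain_linear_score(weights: dict[str, float], bias: float, features: dict[str, float]):
    """Split a linear score into the bias plus one additive contribution per feature."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    # Sort by absolute impact so the most influential features are listed first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Invented model and comparison, for illustration only.
weights = {"minutiae_matched": 0.8, "ridge_count_diff": -0.5, "image_quality": 0.3}
features = {"minutiae_matched": 12, "ridge_count_diff": 3, "image_quality": 0.6}
score, ranked = explain_linear_score(weights, bias=-4.0, features=features)
print(f"score = {score:.2f}")
for name, value in ranked:
    print(f"  {name:>18}: {value:+.2f}")
```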

On the legal side, the landscape is evolving to regulate the use of AI. The European Union has adopted an AI Regulation (the AI Act, Regulation (EU) 2024/1689), the world’s first comprehensive legislation framing the development, commercialization, and use of artificial intelligence systems [8]. Its goal is to minimize risks to safety and fundamental rights by imposing obligations according to each application’s level of risk. AI systems used in law enforcement and in the administration of justice are among those classified as high-risk. In France, the CNIL has published recommendations emphasizing that innovation can be reconciled with respect for individual rights during the development of AI solutions [9]. These include compliance with the GDPR and purpose limitation (i.e., training a model only for legitimate and clearly defined objectives). They also cover proportionality in data collection and data protection impact assessments for any system likely to significantly affect individuals. These safeguards aim to ensure that enthusiasm for AI does not come at the expense of the fundamental principles of justice and privacy.
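One way to build data protection in from the design phase is to replace direct identifiers with keyed pseudonyms before data reach a training pipeline. Here is a minimal sketch using Python’s standard `hmac`, `hashlib` and `secrets` modules. The field names are hypothetical. Under the GDPR, pseudonymised data remain personal data, so this step reduces risk but is not anonymisation.

```python
import hashlib
import hmac
import secrets


def pseudonymise(record: dict, key: bytes, id_fields=("name", "case_number")) -> dict:
    """Return a copy of `record` with direct identifiers replaced by HMAC-SHA256 pseudonyms."""
    out = dict(record)  # other fields are copied unchanged
    for field in id_fields:
        if field in out:
            out[field] = hmac.new(key, str(out[field]).encode("utf-8"), hashlib.sha256).hexdigest()
    return out


# The key must be stored separately from the data and tightly controlled: whoever holds it
# can recompute the pseudonym of any known identifier and so re-link records.
key = secrets.token_bytes(32)
record = {"name": "DOE John", "case_number": "2025-0042", "profile": {"vWA": ["17", "18"]}}
pseudo = pseudonymise(record, key)
print(pseudo)
```

Because the same key always yields the same pseudonym, records belonging to one person can still be linked within the dataset without exposing who that person is.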

Encouraging Innovation While Demanding Scientific Validation and Transparency

A delicate balance must therefore be struck between technological innovation and regulation. On one hand, overly restricting experimentation with AI and its adoption in forensics could deprive investigators of tools that might prove decisive in solving complex cases. On the other, leaving the field unregulated and unchecked would risk judicial errors or violations of rights. The solution likely lies in a measured approach: encouraging innovation while demanding solid scientific validation and transparency in methods. Ethics committees and independent experts can be involved to audit algorithms, verifying that they comply with standards and do not replicate problematic biases. Furthermore, legal professionals must be informed and trained on these new technologies so they can meaningfully debate their probative value in court. A judge trained in the basic concepts of AI will be better placed to understand the evidentiary weight (and limitations) of evidence derived from an algorithm.

Conclusion: The Future of Forensics in the AI Era

Artificial intelligence is set to deeply transform forensics, offering investigators analysis tools that are faster, more accurate, and capable of handling volumes of data once considered inaccessible. Whether it is sifting through gigabytes of digital information, comparing latent traces with improved reliability, or untangling complex DNA profiles in a matter of minutes, AI opens new horizons for solving investigations more efficiently.

But this technological leap comes with crucial challenges. Learning techniques, database quality, algorithmic bias, transparency of decisions, regulatory framework: these are all issues that will determine whether AI can truly strengthen justice without undermining it. At a time when public trust in digital tools is under more scrutiny than ever, it is imperative to integrate these innovations with rigor and responsibility.

The future of AI in forensics will not be a confrontation between machine and human, but a collaboration in which human expertise remains central. Technology may help us see faster and farther, but interpretation, judgment and decision-making will remain in the hands of forensic experts and the judicial authorities. Thus, the real question may not be how far AI can go in forensic science, but how we will frame it to ensure that it serves ethical and equitable justice. Will we be able to harness its power while preserving the very foundations of a fair trial and the right to a defence?

The revolution is underway. It is now up to us to make it progress, not drift.

Bibliography

[1] Océane DUBOUST. L’IA peut-elle aider la police scientifique à trouver des similitudes dans les empreintes digitales ? Euronews, 12/01/2024. Available at: https://fr.euronews.com/next/2024/01/12/lia-peut-elle-aider-la-police-scientifique-a-trouver-des-similitudes-dans-les-empreintes-d#:~:text=,il [accessed 15/03/2025]

[2] Muhammad Arjamand et al. The Role of Artificial Intelligence in Forensic Science: Transforming Investigations through Technology. International Journal of Multidisciplinary Research and Publications, Volume 7, Issue 5, pp. 67-70, 2024. Available at: http://ijmrap.com/ [accessed 15/03/2025]

[3] Gendarmerie Nationale. Kit universel, puce RFID, IA : le PJGN à la pointe de la technologie sur l’ADN. Updated 22/01/2025. Available at: https://www.gendarmerie.interieur.gouv.fr/pjgn/recherche-et-innovation/kit-universel-puce-rfid-ia-le-pjgn-a-la-pointe-de-la-technologie-sur-l-adn [accessed 15/03/2025]

[4] Michelle TAYLOR. EXCLUSIVE: Brand New Deterministic Software Can Deconvolute a DNA Mixture in Seconds. Forensic Magazine, 29/03/2022. Available at: https://www.forensicmag.com [accessed 15/03/2025]

[5] Sébastien AGUILAR. L’ADN à l’origine des portraits-robot ! Forenseek, 05/01/2023. Available at: https://www.forenseek.fr/adn-a-l-origine-des-portraits-robot/ [accessed 15/03/2025]

[6] Max M. Houck, Ph.D. CSI/AI: The Potential for Artificial Intelligence in Forensic Science. iShine News, 29/10/2024. Available at: https://www.ishinews.com/csi-ai-the-potential-for-artificial-intelligence-in-forensic-science/ [accessed 15/03/2025]

[7] Mark Takano. Black box algorithms’ use in criminal justice system tackled by bill reintroduced by Reps. Takano and Evans. Takano House, 15/02/2024. Available at: https://takano.house.gov/newsroom/press-releases/black-box-algorithms-use-in-criminal-justice-system-tackled-by-bill-reintroduced-by-reps-takano-and-evans [accessed 15/03/2025]

[8] Mon Expert RGPD. Artificial Intelligence Act : La CNIL répond aux premières questions. Available at: https://monexpertrgpd.com [accessed 15/03/2025]

[9] CNIL. Les fiches pratiques IA. Available at: https://www.cnil.fr [accessed 15/03/2025]

Definitions:

- ¹ Machine learning

Machine learning is a branch of artificial intelligence that enables computers to learn from data without being explicitly programmed. It relies on algorithms capable of detecting patterns, making predictions, and improving performance through experience.

- ² GPU (Graphics Processing Unit)

A GPU is a specialized processor designed to perform massively parallel computations. Originally developed for rendering graphics, it is now widely used in artificial intelligence applications, particularly for training deep learning models. CPUs (central processing units) are optimized for sequential, general-purpose tasks. GPUs, by contrast, contain thousands of cores optimized to execute numerous operations simultaneously on large datasets.

- ³ Deep learning

Deep learning is a subfield of machine learning that uses artificial neural networks composed of multiple layers to model complex data representations. Inspired by the human brain, it allows AI systems to learn from large volumes of data and improve their performance over time. Deep learning is especially effective for processing images, speech, text, and complex signals, with applications in computer vision, speech recognition, forensic science, and cybersecurity.

All rights reserved - © 2026 Forenseek